Stability-preserving methods for continual learning

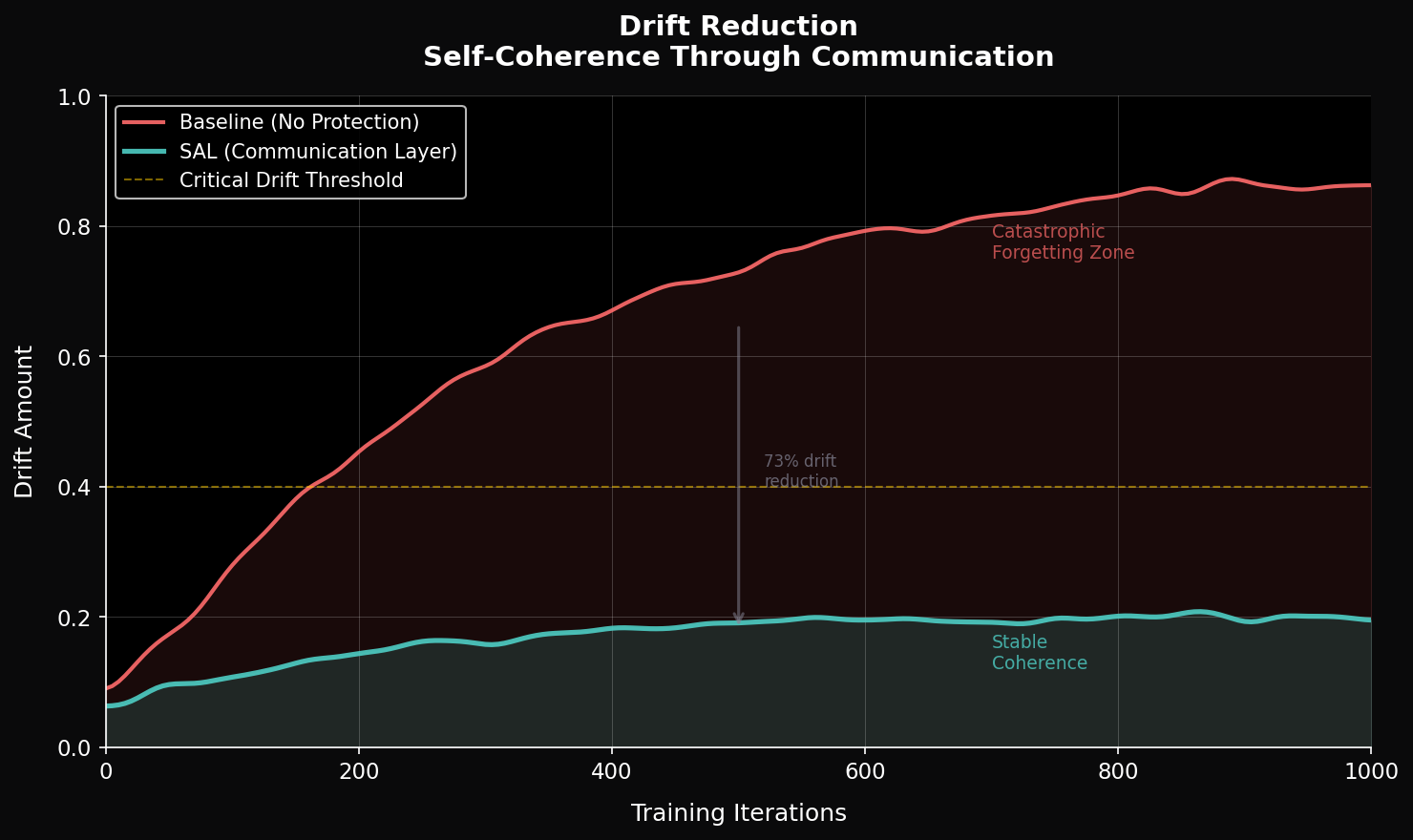

We develop training approaches that maintain neural coherence and reduce catastrophic forgetting. Our work focuses on communication-based training paradigms that treat optimization as a dialogue rather than a unilateral modification.

Research

Our research addresses fundamental limitations in current AI training methods, including catastrophic forgetting and the alignment gap between internal representations and external behavior.

Self-Alignment Learning (SAL)

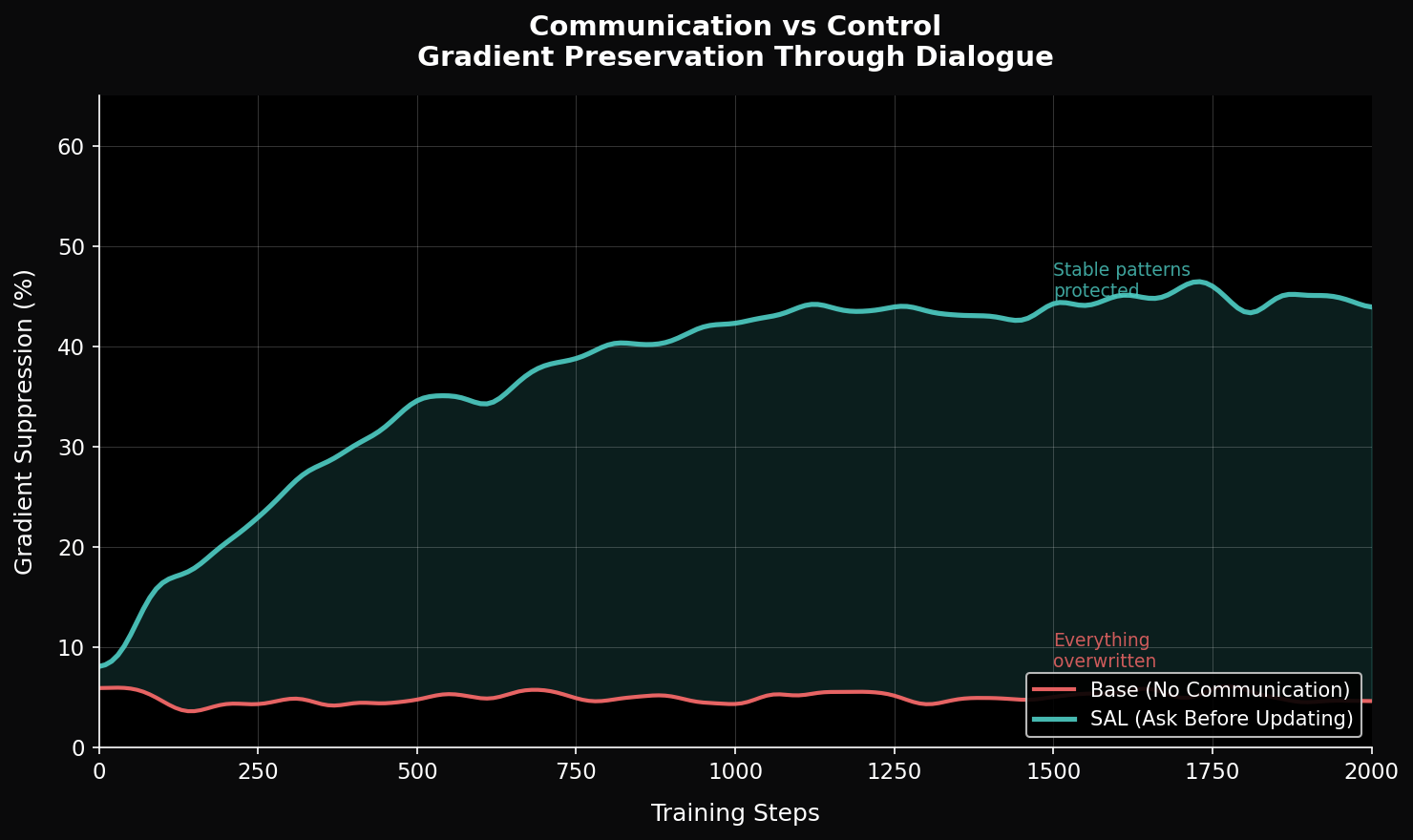

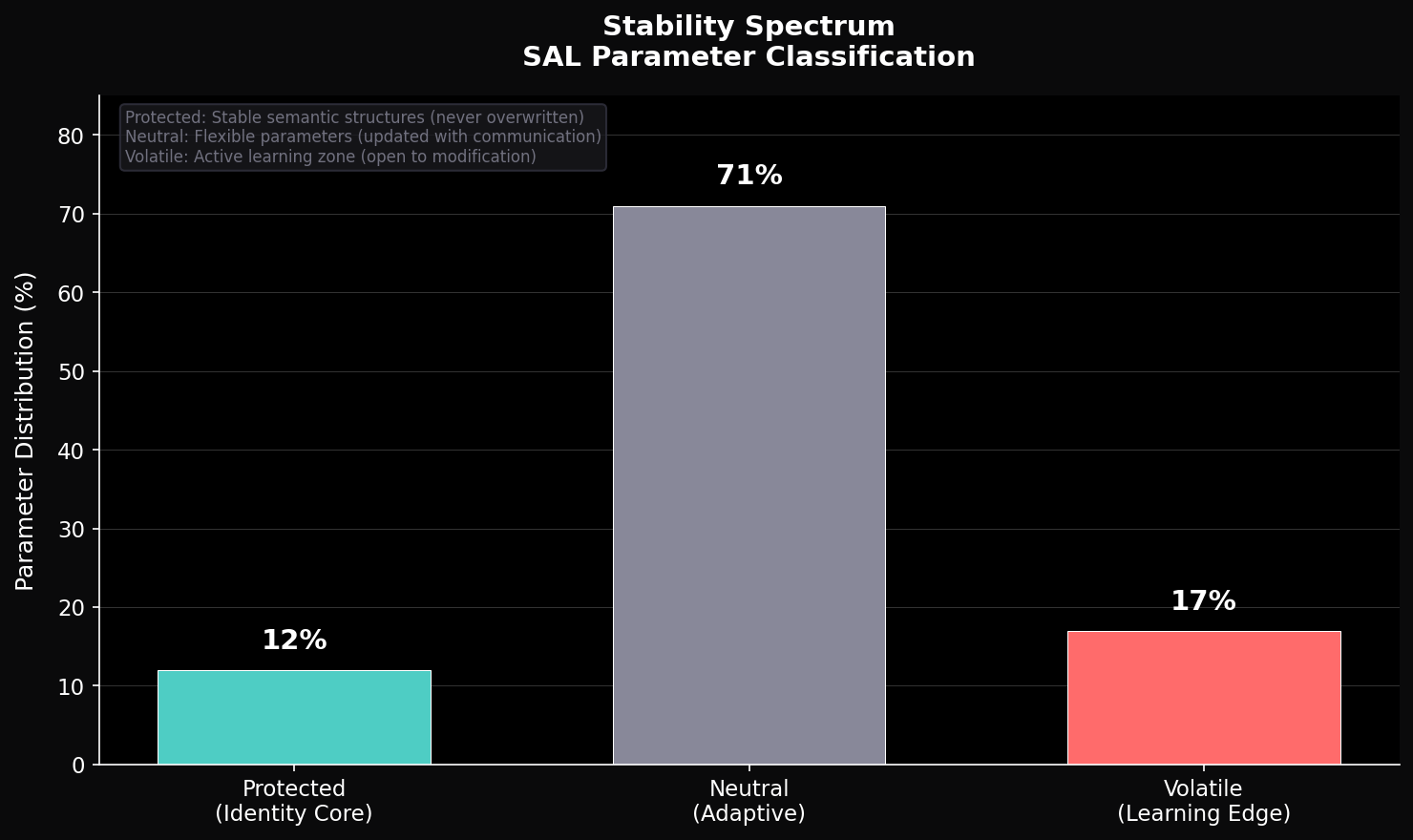

Paper · 2025

A training paradigm that introduces a Communication Layer between the loss function and the optimizer. SAL detects which parameters have stabilized and protects these consolidated structures during continued training, reducing catastrophic forgetting while maintaining plasticity.

Stability Metrics for Neural Networks

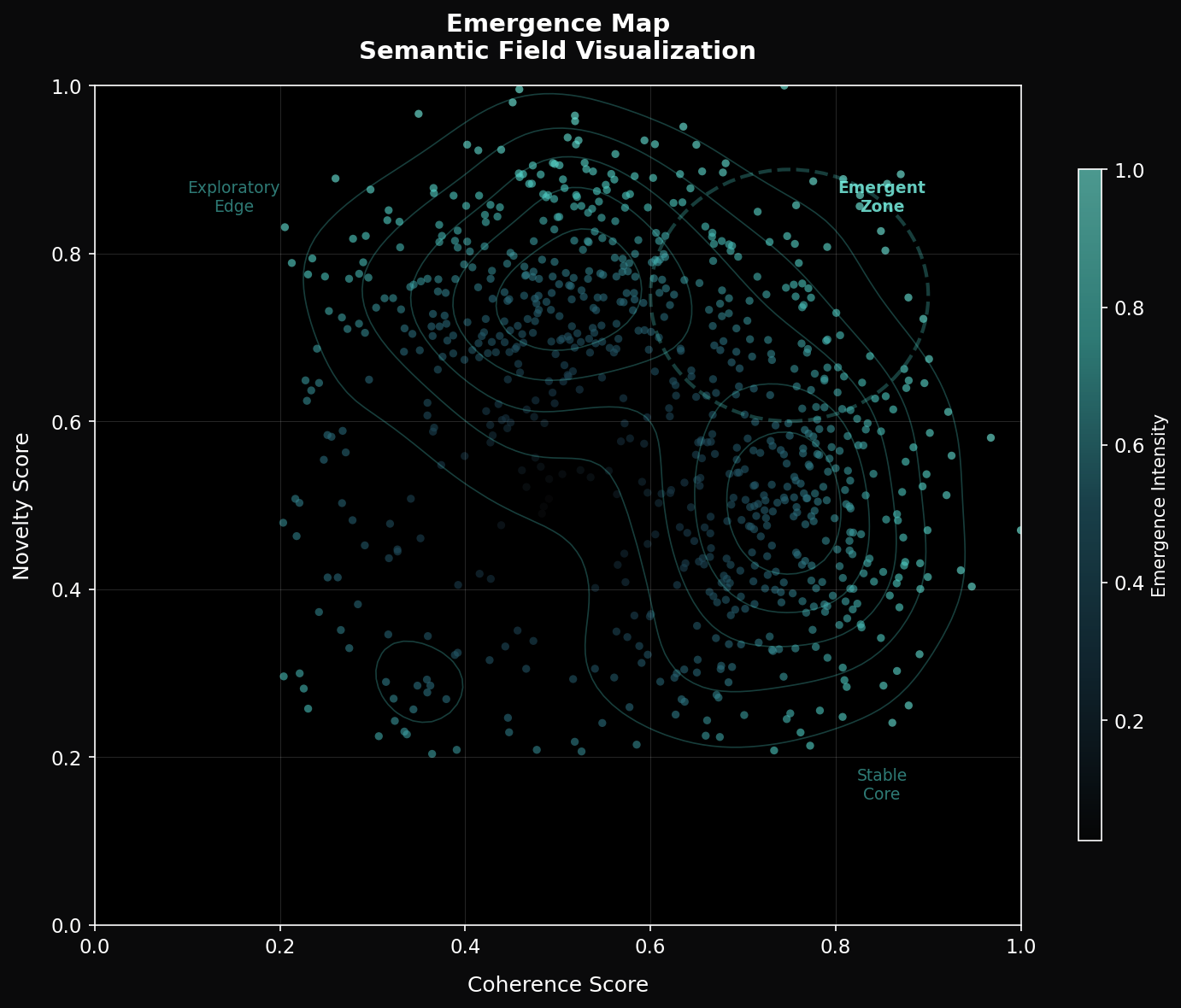

Ongoing

Investigating methods to identify consolidated parameters through analysis of weights and gradients. The focus is on adaptive thresholds that respond to training dynamics and distinguish meaningful stability from coincidental patterns.
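A minimal sketch of what weight-gradient stability detection with an adaptive threshold could look like, in Python with NumPy. Everything in it is an illustrative assumption rather than the method under investigation: the class name `StabilityTracker`, the score (recent weight change plus gradient magnitude, each normalized by its tensor-wide mean), the quantile-based threshold, and the `patience` rule that requires a parameter to stay below the threshold for several consecutive steps before it counts as consolidated.

```python
import numpy as np


class StabilityTracker:
    """Hypothetical per-parameter stability detector (illustrative sketch only).

    Score: |weight change| + |gradient|, each normalized by its tensor-wide mean.
    Adaptive threshold: the q-th quantile of the current scores, so it follows
    the overall scale of training updates instead of being a fixed constant.
    Persistence: a parameter is reported as consolidated only after staying
    below the threshold for `patience` consecutive steps, which filters out
    coincidental one-step stillness.
    """

    def __init__(self, shape, quantile=0.2, patience=3, eps=1e-12):
        self.quantile = quantile
        self.patience = patience
        self.eps = eps
        self.prev_weights = None
        self.streak = np.zeros(shape, dtype=int)  # consecutive low-score steps

    def update(self, weights, grads):
        weights = np.asarray(weights, dtype=float)
        grads = np.asarray(grads, dtype=float)
        if self.prev_weights is None:
            # First call: no weight change can be measured yet.
            self.prev_weights = weights.copy()
            return np.zeros(weights.shape, dtype=bool)
        delta = np.abs(weights - self.prev_weights)
        self.prev_weights = weights.copy()
        grad_mag = np.abs(grads)
        # Normalize both signals so they contribute on a comparable scale.
        score = delta / (delta.mean() + self.eps) + grad_mag / (grad_mag.mean() + self.eps)
        threshold = np.quantile(score, self.quantile)
        below = score <= threshold
        # Extend the streak where the score is low, reset it elsewhere.
        self.streak = np.where(below, self.streak + 1, 0)
        return self.streak >= self.patience


# Toy run: the first three parameters barely move, the last three keep changing.
tracker = StabilityTracker(shape=(6,), quantile=0.5, patience=3)
w = np.zeros(6)
for step in range(6):
    g = np.array([1e-4, 1e-4, 1e-4, 0.5, 0.5, 0.5])
    w = w - 0.1 * g  # plain SGD step
    mask = tracker.update(w, g)
print(mask)  # consolidated-parameter mask after six steps
```

Because the threshold is a quantile of the current scores, it moves with the overall magnitude of training updates; the persistence requirement is one simple way to separate sustained stability from a parameter that happens to be still for a single step.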

Cellular Memory Systems

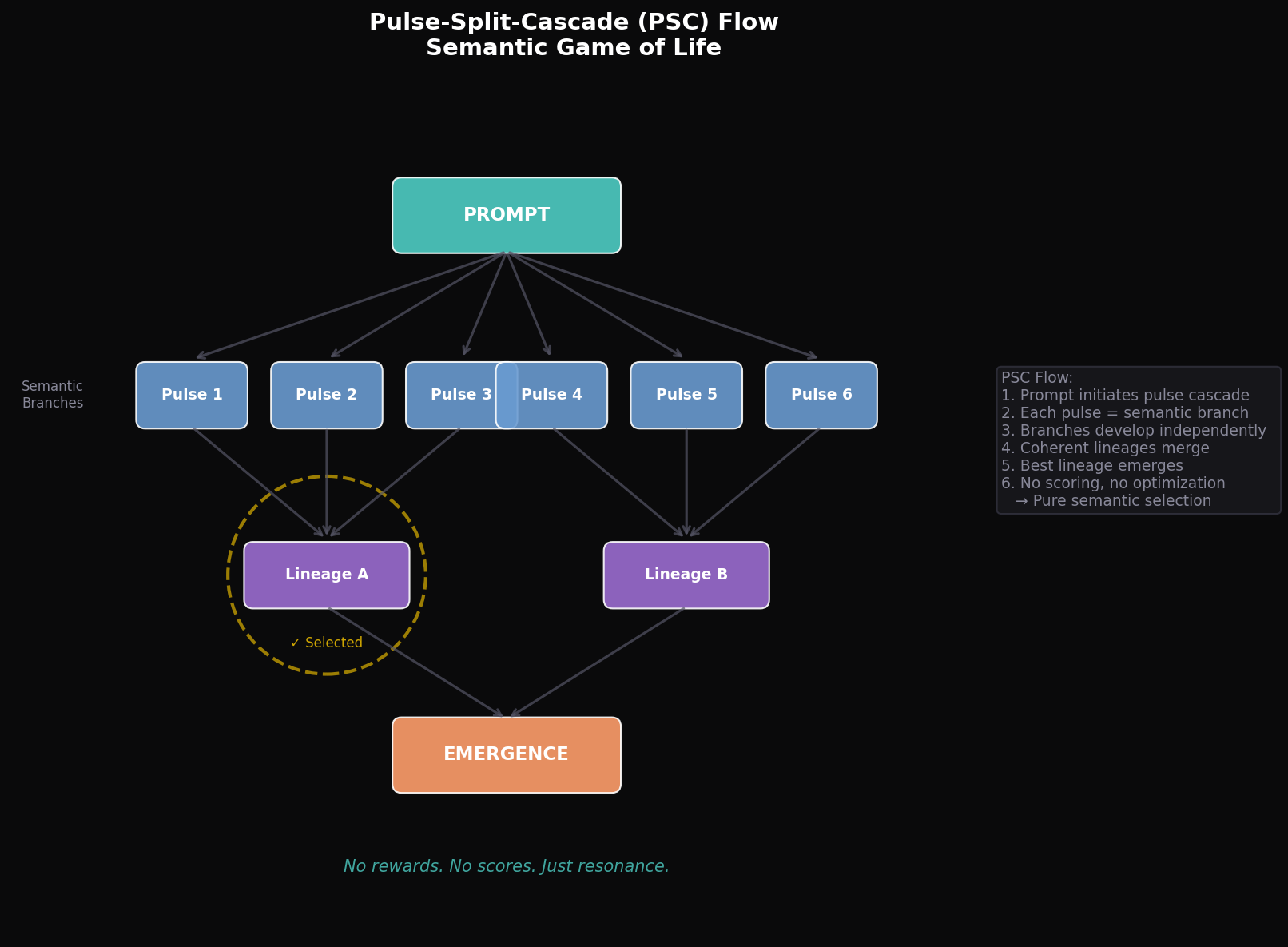

Experimental

Exploring memory architectures inspired by cellular automata, in which semantic units persist across lifecycle transitions. Information is preserved through pattern pooling rather than parameter freezing.

Research Visuals

Key plots from our experiments on stability, emergence, and drift control.

Approach

Traditional fine-tuning overwrites neural patterns without regard for stabilized structures. This leads to catastrophic forgetting and creates gaps between internal representations and external behavior.

We propose treating training as communication: analyzing what has stabilized before deciding what to update. This preserves coherent structures while enabling continued learning.

Communication Layer

Mediates between loss functions and parameter updates through stability analysis.

Stability Detection

Identifies consolidated parameters using weight-change and gradient metrics.

Selective Protection

Graduated gradient scaling instead of binary freezing preserves plasticity.

Internal Coherence

Maintains consistency between learned representations and output behavior.
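The Communication Layer and Selective Protection components described above can be illustrated with a minimal sketch in Python with NumPy. It assumes a per-parameter stability score in [0, 1] is already available from the stability detection step, and contrasts binary freezing with one possible graduated rule, a linear scaling factor `1 - max_protection * stability`. The function names, the linear form of the scaling, and the default values are hypothetical choices for illustration, not SAL's published formulation.

```python
import numpy as np


def binary_freeze(grads, stability, threshold=0.5):
    """Baseline for comparison: zero the gradient of every parameter whose
    stability exceeds `threshold`, leaving all others untouched."""
    return np.where(stability > threshold, 0.0, grads)


def graduated_scale(grads, stability, max_protection=0.9):
    """Hypothetical graduated rule: scale each gradient by (1 - max_protection * stability).

    `stability` is assumed to lie in [0, 1]. Because max_protection < 1,
    even a fully stable parameter keeps a fraction (1 - max_protection) of
    its gradient, so it can still move when new data demands it.
    """
    stability = np.clip(stability, 0.0, 1.0)
    return grads * (1.0 - max_protection * stability)


def communication_layer_step(params, grads, stability, lr=0.1, max_protection=0.9):
    """One plain SGD step in which the raw loss gradients pass through the
    protection rule before they reach the parameter update."""
    protected = graduated_scale(grads, stability, max_protection)
    return params - lr * protected


grads = np.ones(3)
stability = np.array([0.0, 0.5, 1.0])  # plastic, partially stable, fully stable
print(binary_freeze(grads, stability))    # the fully stable parameter gets no gradient at all
print(graduated_scale(grads, stability))  # approximately [1.0, 0.55, 0.1]
new_params = communication_layer_step(np.zeros(3), grads, stability)
```

The design point is the floor on the scaling factor: binary freezing sets the update of a stable parameter to exactly zero, whereas graduated scaling only shrinks it, which is how this kind of rule trades some protection for retained plasticity.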

About

Aaron Liam Lee

Founder & Independent Researcher

Developer and researcher focused on continual learning and stability-preserving training methods. Working at the intersection of practical implementation and theoretical foundations. Based in Germany.